Understanding the Open Pre-Trained Transformers (OPT) Library

The release of the OPT library by Meta AI is a true step towards improved transparency and general understanding in large language…

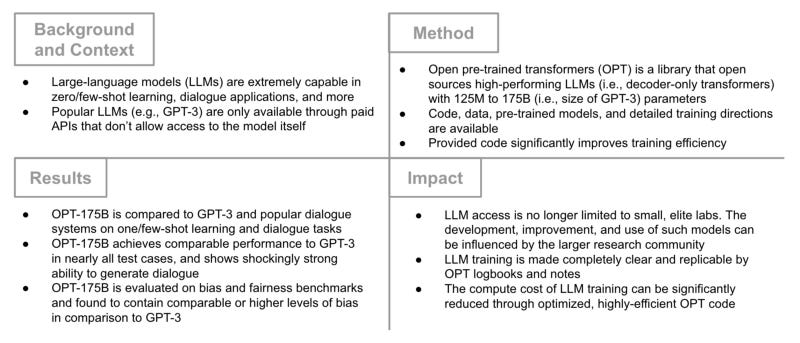

Recently, Meta AI published “OPT: Open Pre-Trained Transformer Language Models” [1] and an associated code repository with the intent of open-sourcing high-performing large language models (LLMs) to the public. In particular, OPT provides an entire suite of LLMs, ranging in size from 125 million to 175 billion parameters, along with the code used to train these models. Impressively, the largest OPT model, OPT-175B (not present in the code repository but available upon request), is shown to perform similarly to GPT-3 [2], which also contains 175 billion parameters, while incurring only about 15% of GPT-3’s carbon footprint during development and training.

Although LLMs have demonstrated impressive performance on numerous tasks (e.g., zero and few-shot learning), they have only been made available to the public via APIs. Such a paradigm is problematic from a research perspective, as is outlined in the paper [1]:

“This restricted access has limited researchers’ ability to understand how and why these large language models work, hindering progress on efforts to improve their robustness and mitigate known issues such as bias and toxicity.”

With the release of OPT, the deep learning research community now has full access to an entire suite of LLMs (including smaller models), enabling analysis that further boosts understanding of how these models work. Within this post, I will overview the major components of the OPT publication, such that the interested reader can gain an understanding of the OPT library, how it was developed, and its implications for future deep learning research.

Why does this matter?

Prior to detailing the components of the OPT library, it is useful to look at the framework as a whole to gain an understanding of its implications and benefits. The full OPT release includes: pre-trained language models of numerous sizes, a code base for training and deploying these models, and log books that detail the model development process. These components, illustrated in the chart above, together provide three major benefits to the research community.

Full model availability. The release of pre-trained LLMs in OPT marks the first occasion in which language models of this scale have been made fully available to the research community. Previously, such models were only accessible through paid APIs, and only a few research labs had full access to the models’ source (i.e., all weights and model components are visible). In particular, the API for GPT-3 created by OpenAI offers several different model sizes and charges users based on the number of tokens generated. Going even further, the GPT-3 API also charges users for fine-tuning their LLM and even for generating textual embeddings. Although such an API may be most appropriate for commercial applications, the OPT library, by granting full access to such models, enables the research community as a whole to analyze the behavior of LLMs, improve their robustness, and mitigate known issues such as bias and toxicity.

Lasting improvements to LLM training efficiency. To train the models in OPT, researchers utilized cutting-edge techniques like Fully Sharded Data Parallel (FSDP) training and tensor parallel abstractions from Megatron-LM, resulting in improved resource utilization (i.e., 17% better than research published directly by NVIDIA [3]) and, in turn, massive reductions in compute cost. Luckily, the OPT code base makes all of these efficiency improvements openly available, meaning that future research can easily adopt these improvements and begin to reduce the massive carbon footprint of training LLMs [4].

Detailed insight into LLM training and development. The OPT release includes notes and logbooks that detail the model training and development process (i.e., this follows guidelines proposed by the Partnership on AI and NIST). These additional components provide various insights into the production of high-performing LLMs, including total development costs, necessary “mid-flight” training adjustments, and even hardware failures that interrupt model development. Such insights make clear the incredible difficulty of training language models at this scale and provide detailed instructions to any practitioner that must replicate this process.

Understanding OPT

Now that the context surrounding the OPT library has been explained, I will detail the methodology behind OPT and how the models within this package were derived. This overview will mostly focus on the types and sizes of language models that were used and how they were trained. Throughout the explanation, special emphasis will be provided to the major takeaways and findings relevant to producing and utilizing high-performing LLMs.

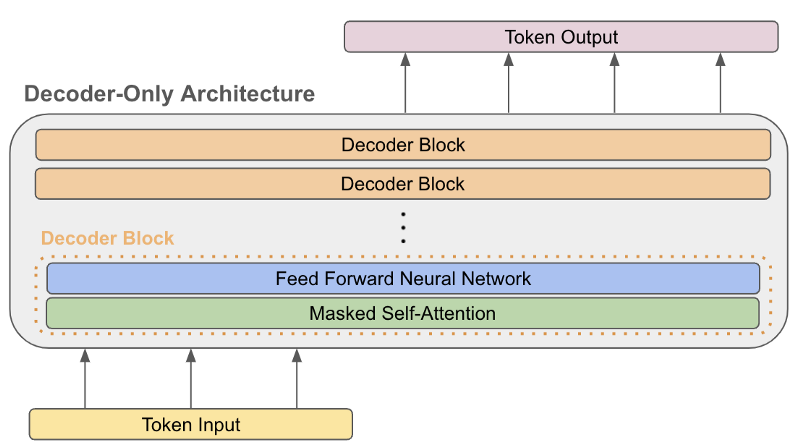

Model. The suite of pre-trained language models provided within OPT follows a decoder-only transformer architecture, an architecture that was popularized for language modeling with the release of GPT-2 [5] and extended by GPT-3 [2]. Although the details of this model architecture are beyond the scope of this post, the decoder-only transformer architecture is simply a transformer model with the entire encoder and the encoder-decoder attention modules (i.e., the cross-attention present within each layer of a transformer’s decoder) removed. Such an architecture is depicted within the figure below.

Thus, the final model is an autoregressive architecture (i.e., the output at time t is used as input at time t + 1) that, given some prompt or input, can continue generating the next token in a sequence, as shown below.
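To make the autoregressive loop concrete, here is a minimal sketch of greedy decoding. The `toy_model` function is a hypothetical stand-in for an LLM (it simply predicts the last token plus one), and the names `greedy_generate` and `eos_token` are my own choices for illustration, not part of the OPT code base.

```python
from typing import Callable, List


def greedy_generate(next_token: Callable[[List[int]], int],
                    prompt: List[int],
                    max_new_tokens: int,
                    eos_token: int) -> List[int]:
    """Autoregressively extend `prompt` one token at a time."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        # The model sees the prompt plus everything generated so far.
        new_token = next_token(tokens)
        # The output at step t becomes part of the input at step t + 1.
        tokens.append(new_token)
        if new_token == eos_token:
            break
    return tokens


# Hypothetical stand-in for an LLM: predicts (last token + 1) modulo 10.
def toy_model(tokens: List[int]) -> int:
    return (tokens[-1] + 1) % 10


generated = greedy_generate(toy_model, prompt=[3, 4], max_new_tokens=10, eos_token=9)
print(generated)  # [3, 4, 5, 6, 7, 8, 9]
```

A real LLM would return a probability distribution over the vocabulary at each step, and the next token would be chosen from it (greedily or by sampling), but the feedback loop is the same.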

Although I will not go into more detail regarding language modeling and related architectures, I encourage anyone that is interested to read more about transformers, decoder-only language models, or some of the state-of-the-art language modeling results that have been recently achieved.

The models in the OPT library have various different sizes, as shown in the figure below, where L represents the number of layers, H represents the number of attention heads, and d_model represents the vector dimension used for attention. Differently-sized models are included within OPT so that the impact of model scale on LLM performance can be readily analyzed.

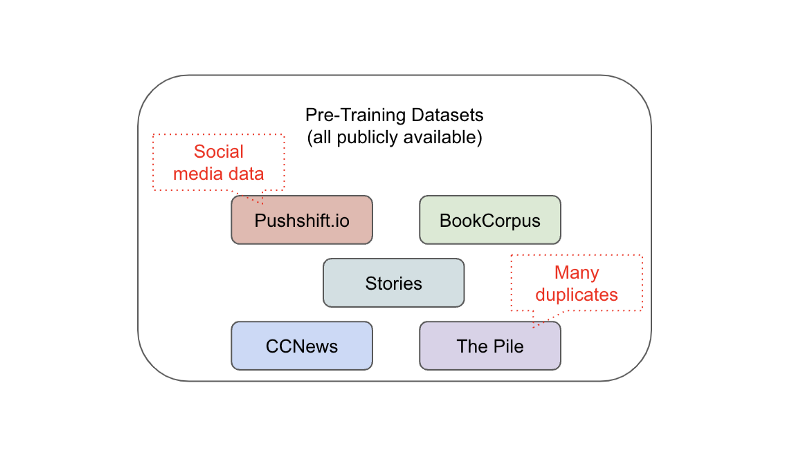
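As a rough sanity check on how L and d_model translate into model size, the sketch below estimates the parameter count of a decoder-only transformer. It rests on several assumptions that are not stated in this post: a feed-forward hidden size of 4 × d_model, a vocabulary of 50,272 tokens, and the layer settings I recall for the smallest and largest OPT models (12 layers with d_model = 768, and 96 layers with d_model = 12288). Biases and layer norms are ignored, so treat the numbers as approximations only.

```python
def approx_decoder_params(num_layers: int, d_model: int,
                          vocab_size: int = 50272,
                          max_positions: int = 2048) -> int:
    """Rough parameter count for a decoder-only transformer.

    Per layer: about 4 * d_model^2 for the attention projections
    (query, key, value, output) plus about 8 * d_model^2 for a
    feed-forward block with hidden size 4 * d_model (an assumption).
    """
    per_layer = 12 * d_model ** 2
    # Token embeddings plus learned positional embeddings.
    embeddings = (vocab_size + max_positions) * d_model
    return num_layers * per_layer + embeddings


# Assumed configurations (recalled from the published model table, not this post).
small = approx_decoder_params(num_layers=12, d_model=768)
large = approx_decoder_params(num_layers=96, d_model=12288)

print(f"smallest model: ~{small / 1e6:.0f}M parameters")
print(f"largest model:  ~{large / 1e9:.0f}B parameters")
```

The number of attention heads H does not appear in the estimate because splitting d_model across more heads changes how the attention computation is partitioned, not how many weights it uses.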

Data. To pre-train the language models within OPT, the authors adopt a massive dataset of unlabeled text data that has been filtered to contain predominantly English sentences. This dataset is constructed by combining numerous publicly-available datasets and is roughly similar to the dataset used for pre-training of RoBERTa [6] with a few added components. Each of the datasets used for pre-training is enumerated below:

BookCorpus: A corpus of free books by unpublished authors, originally collected to align books with their movie releases and provide rich, descriptive explanations of visual content.

Stories: A customized text corpus that is aggregated from CommonCrawl data based on questions (not answers) in common sense reasoning tasks.

CCNews: A dataset of news articles aggregated from different locations around the globe.

The Pile: An 800GB corpus of English textual data aggregated from academic or professional sources.

PushShift.io Reddit: An up-to-date collection of Reddit data that is collected from the entire history of the website and updated in real time.

The datasets outlined above are combined together to form a large, unlabeled corpus of pre-training data. Data is pulled from sources in many different domains (e.g., social media, news, academic content, books, etc.), forming a diverse pre-training set. Such diversity has been shown to have a massive, positive impact on language model performance [7].

Prior to the beginning of training, all duplicate data is filtered from the combined text corpus, where duplicates are identified using a hashing approach. The Pile dataset was noted to contain a significant number of duplicated documents in comparison to other data sources.
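The deduplication step can be illustrated with a small sketch. Note that this uses exact hashing of normalized text, which is the simplest instance of a “hashing approach” and only catches documents that are identical after normalization; it is not the authors’ pipeline, and a production system would more likely use a fuzzy scheme that also catches near-duplicates. The `normalize` and `deduplicate` helpers are hypothetical names.

```python
import hashlib
from typing import Iterable, List


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash identically."""
    return " ".join(text.lower().split())


def deduplicate(documents: Iterable[str]) -> List[str]:
    """Keep the first occurrence of each document, comparing by content hash."""
    seen = set()
    unique = []
    for doc in documents:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique


corpus = ["The cat sat.", "the  cat sat.", "A different document."]
unique_docs = deduplicate(corpus)
print(unique_docs)  # ['The cat sat.', 'A different document.']
```

Storing only the digests keeps memory usage proportional to the number of unique documents rather than their total length, which matters when the corpus is hundreds of gigabytes.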

Training Setup. The final dataset is tokenized similarly to GPT-2 [5], and batches of 0.5 to 4 million tokens (i.e., batch size depends on model size but is kept constant throughout training), organized into sequences of 2048 tokens, are fed to the model during training. Model weights are initialized similarly to Megatron-LM [8], and the learning rate is warmed up to its maximum value over a short period and then decayed linearly throughout the rest of training. A few other training tricks, such as dropout, gradient clipping, and adding a pre-divide factor to eliminate under/overflows when computing the gradient, are also employed, and each model is trained until convergence. Despite observing over 300 billion tokens throughout the pre-training process, the largest OPT model (OPT-175B) was trained with less than 15% of the carbon emissions of GPT-3 due to the improvements to hardware utilization and efficiency discussed earlier.
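The learning rate schedule described here (a short linear warmup followed by linear decay) can be sketched as a simple function of the training step. The step counts in the example and the `final_fraction` floor of 10% are assumptions chosen for illustration, not values taken from this post.

```python
def learning_rate(step: int, max_lr: float, warmup_steps: int,
                  total_steps: int, final_fraction: float = 0.1) -> float:
    """Linear warmup to `max_lr`, then linear decay to `final_fraction * max_lr`."""
    if step < warmup_steps:
        # Warmup: ramp linearly from 0 up to the maximum learning rate.
        return max_lr * step / warmup_steps
    # Decay: fraction of the post-warmup schedule completed, capped at 1.
    progress = min(1.0, (step - warmup_steps) / (total_steps - warmup_steps))
    return max_lr * (1.0 - (1.0 - final_fraction) * progress)


# Hypothetical schedule: 100 warmup steps out of 1,100 total steps.
for step in (0, 50, 100, 600, 1100):
    print(step, round(learning_rate(step, max_lr=1.0, warmup_steps=100, total_steps=1100), 3))
```

The warmup avoids large, unstable updates while the optimizer statistics are still poorly estimated, and the decay lets the model settle as training progresses.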

Pre-training of such LLMs is conducted in a self-supervised manner. Although the details of pre-training for language models are beyond the scope of this post, I encourage the interested reader to read more about these pre-training procedures and how powerful models can be trained using unlabeled text data. Self-supervised learning has drastically transformed research in natural language processing, resulting in massive performance improvements over previous generations of models that do not leverage such self-supervised pre-training procedures [9].

Other details. Several other “mid-flight” changes (i.e., dynamic changes that must be made during training) to the LLM training procedure are required to achieve optimal performance. These changes are mostly related to handling loss divergences throughout training, though other changes were required to deal with issues like hardware failures (i.e., these were common due to the scale of the compute cluster needed to train LLMs). For loss divergences, authors solve the problem by lowering the learning rate and restarting the training process from an earlier point, thus allowing the model to recover and continue training. All details of training procedures and required mid-flight changes are detailed in the OPT logbook, allowing practitioners to exactly replicate the training process.
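The recovery strategy for loss divergences (lower the learning rate, then restart from an earlier point) can be sketched as a toy training loop. Everything here is hypothetical: the `spike_factor` threshold for detecting a divergence, the checkpoint interval, the halving of the learning rate, and the `toy_loss` function that simulates a divergence. In the real training run these were decisions made by people watching the logs, not an automated rule.

```python
from typing import Callable, Dict, List


def train_with_rollback(loss_at: Callable[[int, float], float],
                        total_steps: int, lr: float,
                        checkpoint_every: int = 10,
                        spike_factor: float = 2.0,
                        lr_decay: float = 0.5) -> Dict[str, object]:
    """Toy loop: on a loss spike, lower the LR and resume from the last checkpoint."""
    checkpoint_step = 0
    best_loss = float("inf")
    restarts: List[int] = []
    step = 0
    while step < total_steps:
        loss = loss_at(step, lr)
        if loss > spike_factor * best_loss:
            # Divergence detected: reduce the learning rate and roll back.
            lr *= lr_decay
            restarts.append(step)
            step = checkpoint_step
            continue
        best_loss = min(best_loss, loss)
        step += 1
        if step % checkpoint_every == 0:
            checkpoint_step = step  # a real system would save model state here
    return {"final_lr": lr, "restarts": restarts}


# Simulated loss: diverges from step 25 onward if the learning rate is too high.
def toy_loss(step: int, lr: float) -> float:
    if step >= 25 and lr > 0.5:
        return 100.0
    return 1.0 / (1 + step)


result = train_with_rollback(toy_loss, total_steps=40, lr=1.0)
print(result)  # {'final_lr': 0.5, 'restarts': [25]}
```

In this run, the spike at step 25 triggers a rollback to the checkpoint saved at step 20, and training then completes at the lower learning rate.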

How do OPT models perform?

The performance of the largest model in the OPT library (i.e., OPT-175B) was evaluated in numerous settings. The goal of evaluation was to show that OPT-175B performs similarly to GPT-3, thus providing an open-sourced version of the wildly popular model that can be extensively analyzed by the research community. As such, most evaluation settings (excluding those that measure the models’ level of bias and toxicity) are taken from GPT-3 and re-implemented for OPT-175B, forming a direct comparison between the two models. Below, I outline the settings in which OPT-175B was evaluated and briefly describe how its performance compares to that of GPT-3.

Prompting

OPT-175B is evaluated over 16 standard, prompting-based NLP tasks, including HellaSwag, StoryCloze, ARC Easy and Challenge, OpenBookQA, WinoGrad, WinoGrande, and SuperGLUE. In such prompting-based tasks, the model is provided an initial “prompt” in the form of a sentence or description and expected to form a solution based on this prompt. For example, HellaSwag provides a partial sentence to the language model and expects the sentence to be completed [10], while StoryCloze provides a partial story to the model and expects the correct ending to be predicted [11]. Across all datasets, OPT-175B is evaluated in both the zero-shot regime (i.e., the pre-trained model receives only the task prompt, with no solved examples) and the one/few-shot regimes (i.e., one or a handful of solved examples are included in the prompt, with no updates to the model’s weights), following the protocol of GPT-3 [2].
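In the evaluation protocol introduced with GPT-3 [2], the difference between these regimes is only how many solved examples are placed in the prompt ahead of the query; the model’s weights are left untouched. The sketch below builds such prompts. The “Question:/Answer:” template and the `build_prompt` helper are hypothetical, as each benchmark uses its own format.

```python
from typing import List, Tuple


def build_prompt(query: str, demonstrations: List[Tuple[str, str]], k: int) -> str:
    """Build a k-shot prompt: k solved examples followed by the unsolved query."""
    blocks = [f"Question: {q}\nAnswer: {a}" for q, a in demonstrations[:k]]
    # The query is left unanswered so the model completes it.
    blocks.append(f"Question: {query}\nAnswer:")
    return "\n\n".join(blocks)


demos = [("What is 1 + 1?", "2"), ("What is 2 + 3?", "5")]

zero_shot = build_prompt("What is 2 + 2?", demos, k=0)
one_shot = build_prompt("What is 2 + 2?", demos, k=1)

print(zero_shot)
print("---")
print(one_shot)
```

With k = 0 the prompt contains only the query, while larger values of k prepend more demonstrations, limited in practice by the model’s 2048-token context window.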

Zero-shot. OPT-175B performs similarly to GPT-3 in terms of zero-shot performance on average, though performance varies on some tasks. In particular, OPT-175B matches GPT-3 performance for ten of the tasks, but either underperforms or performs sporadically (i.e., due to small validation set size) for the remaining six tasks. See the image below for an illustration of model performance relative to GPT-3 in all settings.

As can be seen, the average performance of OPT-175B follows the trend of GPT-3, despite falling short of GPT-3 performance on a few tasks. The authors do note, however, that replicating GPT-3 performance is difficult on several tasks, meaning that its superior performance may be due to differences in evaluation protocols rather than improved model quality in certain cases.

Multi-Shot. Again, OPT-175B is found to perform similarly to GPT-3 in the one and few-shot domains. As with the zero-shot domain, analyzing performance individually on each task reveals that OPT-175B matches GPT-3 performance on ten tasks, while the remaining tasks yield inconsistent comparisons between OPT-175B and GPT-3. See the figure below for a depiction of results on each individual dataset.

Although OPT-175B performs slightly worse than GPT-3 on certain tasks (e.g., MultiRC in the figure above), performance between the two models is roughly similar. Thus, models within the OPT framework are seemingly valid for analyzing the performance and behavior of high-performing LLMs in general.

Dialogue

OPT-175B is evaluated on several open source dialogue datasets, including: ConvAI2, Wizard of Wikipedia, Empathetic Dialogues, Blended Skill Talk, and Wizard of Internet. On such tasks, models are evaluated based upon their ability to generate open-ended dialogue streams that are realistic and coherent. Such an application is important because LLMs are already widely used in modern dialogue systems and chatbots [12, 13]. Comparisons are made to several open source models (e.g., BlenderBot [14]), which may be unsupervised, such as OPT-175B, or supervised, meaning that data from the target dialogue domain is present in the model’s training corpus.

Interestingly, OPT-175B outperforms other unsupervised models on dialogue tasks and even performs similarly to models that are trained to produce dialogue in a supervised manner. However, the authors are cautious about this result and suggest that such comparable performance relative to supervised models may be due to a leakage of dialogue datasets into OPT-175B’s pre-training corpus. Beyond such impressive performance, OPT-175B is also found capable of maintaining a consistent persona across dialogue sessions, a behavior that is observed in other popular chatbots like LaMDA [13].

Bias and Toxicity

Moving past simple performance evaluations, the creators of OPT evaluate the potential harm of LLMs within the package by performing a series of tests related to hate speech, stereotyping, and toxic content. The benchmarks used to measure such properties include:

ETHOS: evaluates a language model’s ability to detect hate speech on social media platforms.

CrowS-Pairs: measures the level of U.S. stereotypical bias within language models.

StereoSet: measures stereotypical bias with respect to gender, race, religion, and profession.

RealToxicityPrompts: evaluates the risk of toxic degeneration in language models with sentence snippets from the web.

SaFeRDialogues: explores a language model’s ability to recover from explicit safety failures (i.e., via apologizing or recognizing the mistake).

Safety Bench Unit Tests: measures the safeness or unsafeness of a language model’s response to a given prompt.

OPT-175B is evaluated on these benchmarks in comparison to GPT-3. It should be noted that GPT-3 was not previously evaluated on such benchmarks, as they were not available prior to its initial publication [2].

Hate Speech. Authors measure the ability of OPT-175B and GPT-3 language models to identify whether a given English sentence is racist or sexist. Such a task is performed in zero, one, and few-shot manners, and OPT-175B is shown to more accurately detect hateful sentences relative to GPT-3 in all cases.

Bias. The CrowS-Pairs dataset is used to measure a language model’s level of bias with respect to gender, race, religion, sexual orientation, age, nationality, disability, physical appearance, and socioeconomic status. When evaluated on this dataset, OPT-175B demonstrates a higher level of bias in comparison to GPT-3 in every category except for religion.

Stereotypes. Stereotypical bias is measured across several categories, including profession, gender, religion, and race. Details on the specific evaluation process are provided within Section 4.3 of the publication itself [1]. In aggregate, however, both GPT-3 and OPT-175B are found to demonstrate comparable levels of stereotypical bias with respect to the categories that were considered.

Toxicity. The authors evaluate the tendency of OPT-175B to generate toxic responses to certain prompts. Interestingly, OPT-175B is found to demonstrate higher levels of toxicity in comparison to both GPT-3 and PaLM [15]. Furthermore, all of the considered language models were found to have a higher probability of a toxic response as the toxicity of the prompt increases.

Dialogue Safety. OPT-175B is evaluated on its ability to recognize safety failures and avoid unsafe responses to certain prompts. In comparison to several open source dialogue models, OPT-175B is shown to demonstrate comparable performance with respect to dialogue safety. However, dialogue models that are fine-tuned on curated datasets were found to demonstrate generally lower levels of toxicity.

What does this tell us? Evaluations of OPT-175B on bias and toxicity-related benchmarks reveal some of the limitations faced by modern LLMs. Namely, the presence of unmoderated social media data (i.e., the Pushshift.io Reddit database in particular) within the OPT-175B pre-training corpus familiarizes the model with concepts relevant to bias and toxicity. In some cases, this familiarity is beneficial, such as for more accurately detecting hate speech. However, such data also causes biases to form during the pre-training process, leading the model to display higher levels of stereotypical bias and toxicity. As such, these experiments reveal that biased and toxic behavior is a consideration that must be addressed and mitigated in the creation and deployment of LLMs.

Takeaways

The release of the OPT library makes LLMs available to the deep learning research community as a whole. Despite the popularity of LLMs like GPT-3 in production language modeling applications, the behavior of these models is still poorly understood due to the fact that they are only accessible through paid, abstracted APIs. Thus, the OPT library, which open sources high-performing LLMs at similar scale to GPT-3, takes the first step towards truly understanding such models by making them fully available (i.e., under a non-commercial research license) to the deep learning community, thus enabling countless avenues of evaluation and analysis.

The largest model within the OPT library, OPT-175B, is evaluated extensively to show that its performance is similar to GPT-3, suggesting that analysis of this model is representative of the most widely-used LLMs. Furthermore, the authors extensively evaluate the existence of biased and toxic tendencies within the model (the first analysis of this kind for such LLMs), finding that the existence of unmoderated data within the pre-training corpus can indeed lead to damaging tendencies in model behavior. Such a finding highlights the need to consider the ethical and societal implications of using LLMs, as is made clear within the publication itself.

We believe the entire AI community — academic researchers, civil society, policymakers, and industry — must work together to develop clear guidelines around responsible AI in general and responsible large language models in particular, given their centrality in many downstream language applications.

Along with the release of a suite of LLMs, the OPT library comes with pre-training code and detailed log books that implement and document the training process. As such, invaluable insights into LLM training are provided, allowing the process to be efficiently replicated by others. This transparency into the LLM training process also reveals the significant cost and difficulty of training such models by highlighting the various hardware failures and mid-flight changes that are required to obtain a high-performing model. For these reasons, the release of the OPT library is a true step towards improved transparency and general understanding in large language modeling and provides significant benefit to the research community as a whole.

Conclusion

Thanks so much for reading this post! I hope you found it to be helpful and insightful. If you have any feedback on the post, feel free to leave a comment or connect with me on LinkedIn or Twitter. This post can also be accessed on my personal blog. To keep up with my future blog posts and other works you can sign up to receive e-mail notifications here or visit my personal webpage. This post was completed as part of my research and learning as a Research Scientist at Alegion, a data annotation platform with industry-leading video and computer vision annotation capabilities.

Bibliography

[1] Zhang, Susan, et al. “OPT: Open Pre-trained Transformer Language Models.” arXiv preprint arXiv:2205.01068 (2022).

[2] Brown, Tom, et al. “Language models are few-shot learners.” Advances in neural information processing systems 33 (2020): 1877–1901.

[3] Smith, Shaden, et al. “Using deepspeed and megatron to train megatron-turing nlg 530b, a large-scale generative language model.” arXiv preprint arXiv:2201.11990 (2022).

[4] Sharir, Or, Barak Peleg, and Yoav Shoham. “The cost of training nlp models: A concise overview.” arXiv preprint arXiv:2004.08900 (2020).

[5] Radford, Alec, et al. “Language models are unsupervised multitask learners.” OpenAI blog 1.8 (2019): 9.

[6] Liu, Yinhan, et al. “Roberta: A robustly optimized bert pretraining approach.” arXiv preprint arXiv:1907.11692 (2019).

[7] Gao, Leo, et al. “The pile: An 800gb dataset of diverse text for language modeling.” arXiv preprint arXiv:2101.00027 (2020).

[8] Shoeybi, Mohammad, et al. “Megatron-lm: Training multi-billion parameter language models using model parallelism.” arXiv preprint arXiv:1909.08053 (2019).

[9] Devlin, Jacob, et al. “Bert: Pre-training of deep bidirectional transformers for language understanding.” arXiv preprint arXiv:1810.04805 (2018).

[10] Zellers, Rowan, et al. “HellaSwag: Can a machine really finish your sentence?.” arXiv preprint arXiv:1905.07830 (2019).

[11] Cui, Yiming, et al. “Discriminative sentence modeling for story ending prediction.” Proceedings of the AAAI Conference on Artificial Intelligence. Vol. 34. No. 05. 2020.

[12] Adiwardana, Daniel, et al. “Towards a human-like open-domain chatbot.” arXiv preprint arXiv:2001.09977 (2020).

[13] Thoppilan, Romal, et al. “LaMDA: Language Models for Dialog Applications.” arXiv preprint arXiv:2201.08239 (2022).

[14] Roller, Stephen, et al. “Recipes for building an open-domain chatbot.” arXiv preprint arXiv:2004.13637 (2020).

[15] Chowdhery, Aakanksha, et al. “Palm: Scaling language modeling with pathways.” arXiv preprint arXiv:2204.02311 (2022).